As you read this, remember that the technology is actually years to decades ahead of what the public knows; consequently, the threat is much larger. This article came from the Army, although it carries the familiar disclaimer. If the military sees a dark side to technology, what is the actual gravity of the threat of technology to each of us?

This is a very informative article. The only thing that I would have added is to preserve our humanity in addition to the noted humility.

Use your critical thinking skills and dive in. Celeste

REPOST

[Editor's Note: In Part I of this series, Dr. Nick Marsella addressed the duty we have to examine our assumptions about emergent warfighting technologies / capabilities and their associated implications to identify potential second / third order and "evil" effects. In today's post, he prescribes five actions we can embrace both individually and as organizations to avoid confirmation bias and falling into cognitive thinking traps when confronting the ramifications of emergent technologies — Enjoy!]

In Part I of my blog post, I advised those advocating for the development and/or fielding of technology to be mindful of the second/third order and possible “evil” effects. I defined “evil” as an unexpected and profound negative implication(s) of the adoption/adaption of a technology and related policy. I also recommended heeding the admonition of Tim Cook, Apple's CEO, to take responsibility for our technological creations and have the courage to think through their potential implications – both for the good and the bad.

But even if we as individuals are willing to challenge our assumptions and think broadly and imaginatively, we can still come up short. Why? The answer is due in part to the challenges of prediction and to the fact that we are human, with great cognitive abilities but also weaknesses.1

THE CHALLENGES

First, common sense and many theorists remind us that the future is unknowable.2 The futurist Arthur C. Clarke noted in 1962, “It is impossible to predict the future, and all attempts to do so in any detail appear ludicrous within a very few years.”3 Within this fixed condition of unknowing, the U.S. Departments of Defense and the Army must make choices to determine:

- what capabilities we desire and their related technologies;

- where to invest in research and development; and

- what to purchase and field.

As Michael E. Raynor pointed out in his book, The Strategy Paradox, making choices and developing strategies, which include adopting new technology, where the future is unknowable and “deeply unpredictable” either produces monumental successes or monumental failures.4 For a company this can spell financial ruin, while for the Army, it can spell disaster and the loss of Soldiers' lives and national treasure. Yet, we must also choose a “way ahead” – often by considering “boundaries” – in a sense the left and right limits – of potential implications.

Second, our predictions of when a technology will become available are often in error, and we cannot accurately determine its implications. For example, a New York Times editorial on December 8, 1903, noted: “A man carrying airplane will eventually be built, but only if mathematicians and engineers work steadily for the next ten million years.”5 The Wright brothers' first flight took place the following week, but it took decades for commercial and military aviation to evolve.

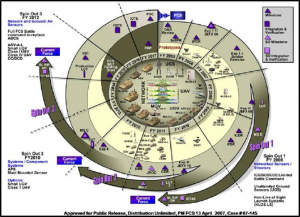

The stillborn Future Combat Systems (FCS) was the U.S. Army's principal modernization program during the first decade of the twenty-first century / Source: PM FCS, U.S. Army

Each of the services has faced force modernization challenges due to the adoption of technologies that weren’t mature enough; whose capabilities were over-promised; or did not support operations in the envisioned Operational Environment. For the U.S. Army, Future Combat Systems (FCS) was the most obvious and recent example of this challenge – costing billions of dollars and, just as importantly, lost time and confidence.6

Third, in terms of future technology, predictions vary widely. For example, MIT’s Rodney Brooks and futurist/inventor Ray Kurzweil give very different answers to the question – “What year do you think human-level Artificial Intelligence [AI, i.e., a true thinking machine] might be achieved with a 50% probability?” Kurzweil predicts AI (as defined) will be available in 2029, while Brooks is a bit more conservative – the year 2200 – a difference of over 170 years.7

While one can understand the difficulty of predicting when “general AI” will be available, other technologies offer similar difficulties. The vision of the “self-driving” autonomous vehicle was first written about and experimented with in the 1920s, yet technological limitations, legal concerns, customer fears, and other barriers make implementing this capability challenging.

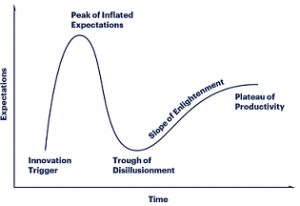

Gartner Hype Cycle / Source: Nicole Saraco Loddo, Gartner

Lastly, as Rodney Brooks has highlighted, Amara’s Law maintains:

“we tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.”8

SECOND, THIRD ORDER AND EVIL EFFECTS

I contend that we either:

- intentionally focus on the positive effects of adopting a technology;

- ignore the experts and those who identify the potential downside of technology; or

- fail to think through, imaginatively, the short-term, long-term, and potentially dangerous effects or dependencies.

We do this because we are human, and more often because we fall into confirmation bias or other cognitive thinking traps that might affect how we view our program or pet technology. As noted by RAND in Truth Decay:

“Cognitive biases and the ways in which human beings process information and make decisions cause people to look for information, opinion, and analyses that confirm preexisting beliefs, to weigh experience more heavily than data and facts, and then to rely on mental shortcuts and the beliefs of those in the same social networks when forming opinions and making decisions.”9

Yet, the warning signs or signals for many unanticipated effects can be found simply by conducting a literature review of government, think tank, academic, and popular reports (to perhaps include science fiction). This may deter some, given that the sheer volume of literature is often overwhelming, but contained within these reports are many of the challenges associated with future technology, such as the potential for deep fakes, loss of privacy, hacking, accidents, deception, and loss of industries/jobs.

At the national level, both the current and previous administrations published reports on AI, as has the Department of Defense – some more balanced than others in addressing issues of fairness, safety, governance, and ethics.10

For example, using photo recognition to help law enforcement and others seems like a great idea, yet its use is increasingly being questioned. This past May, San Francisco became the first city to ban its use, and others may follow suit.11 While some question the decision to ban the technology rather than impose a moratorium until it produces fewer errors, the question remains – did the developers and policy makers consider whether there would be pushback to its introduction given its current performance? What sources did they consult?

Another challenge is language. You’ve seen the commercials – “there is much we can do with AI” – in farming, business, and even beer making. While “AI” is a clever tag line, it may invoke unnecessary fear and misunderstanding. For example, I believe most people equate “robotics” and “AI” with the “Terminator” franchise, raising the fear of an uncontrolled autonomous military force overwhelming humanity. Even worse – under the moniker of “AI,” we will buy into “snake-oil” propositions.

We are at least decades away from “general AI” where a “system exhibits apparently intelligent behavior at least as advanced as a person” across the “full range of cognitive tasks,” but we continue to flaunt the use of “AI” without elaboration.12

SOME ACTIONS TO TAKE

Embrace a learning organization. Popularized by Peter Senge’s The Fifth Discipline: The Art and Practice of the Learning Organization (1990), a learning organization is a place “where people continually expand their capacity to create the results they truly desire, where new and expansive patterns of thinking are nurtured, where collective aspiration is set free, and where people are continually learning how to learn together.”13 While some question whether we can truly make a learning organization, Senge’s thinking may help solve our problem of identifying 2nd/3rd order and evil effects.

First, we must embrace new and expansive patterns of thinking. Force developers use the acronym “DOTMLPF-P” as shorthand for Doctrine, Organization, Training, Materiel, Leadership, Personnel, Facilities, and Policy implications – yet other factors might be as important.

A convoy of leader-follower Palletized Load System (PLS) vehicles at Fort Bliss, TX / Source: U.S. Army photo by Jerome Aliotta

For example, trust in automated or robotic systems must be instilled and measured. In one poll, 73% of respondents noted they would be afraid of riding in a fully autonomous vehicle – up 10 percentage points from the previous year.14 What if we develop a military autonomous vehicle or a leader-follower system and one or more of our host nation allies prohibits its use? What happens if an accident occurs and the host population protests, arguing that the Americans don’t care for their safety?

Technologists and concept developers must move from being stove-piped in labs and single-focused to consider the broader view of technological implications across functions.

Embrace Humility. Given that we cannot predict the future and its potential consequences, developers must seek out diversified opinions, and more importantly, recognize divergent opinions.

U.S. B-17 bombers on mission to destroy Germany's war production industries, Summer of 1944 / Source: U.S. Air Force photo

Embrace the Data. In New York City during World War II, a small organization of some of the most brilliant minds in America worked in the office of the “Applied Mathematics Panel.” In Europe, the 8th U.S. Air Force suffered from devastating bomber losses. Leaders wondered where best to add armor to the bombers to improve survivability without significantly increasing their weight. Reports indicated returning bombers suffered gunfire hits over the wings and fuselage, but not in the tail or cockpits, so to the casual observer it made sense to reinforce the wings and fuselage where the damage was clearly visible.

Abraham Wald, a mathematician, worked on the problem. He noted that reinforcing the spots where damage was evident in returning aircraft wasn’t necessarily the best course of action, given that hits to the cockpit and tail were probably causing aircraft (and aircrew) losses during the mission. In a series of memorandums, he developed a “method of estimating plane vulnerability based on damage of survivors.”15 As Sir Arthur Conan Doyle's Sherlock Holmes noted more than 100 years ago, “It is a capital mistake to theorize before one has data. Insensibly one begins to twist facts to suit theories, instead of theories to suit facts."16
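Wald's reasoning can be illustrated with a toy simulation. The sketch below is only an illustration of survivorship bias, not a reconstruction of Wald's actual method: the per-hit lethality figures, the number of aircraft, and the number of hits per aircraft are all made-up assumptions. It assumes hits land evenly across four sections and then compares how many of those hits would ever be seen by someone inspecting only the returning aircraft.

```python
import random

# Hypothetical chance that a single hit in each section downs the aircraft.
# These values are illustrative assumptions, not Wald's data.
LETHALITY = {"wings": 0.05, "fuselage": 0.05, "tail": 0.5, "cockpit": 0.6}


def simulate(num_planes=10000, hits_per_plane=3, seed=0):
    """Return (all hits per section, hits visible on returning planes)."""
    rng = random.Random(seed)
    sections = list(LETHALITY)
    all_hits = dict.fromkeys(sections, 0)
    survivor_hits = dict.fromkeys(sections, 0)
    for _ in range(num_planes):
        # Every section is equally likely to be hit.
        hits = [rng.choice(sections) for _ in range(hits_per_plane)]
        # The plane returns only if none of its hits proves lethal.
        survived = all(rng.random() >= LETHALITY[s] for s in hits)
        for s in hits:
            all_hits[s] += 1
            if survived:
                survivor_hits[s] += 1
    return all_hits, survivor_hits


all_hits, survivor_hits = simulate()
for section in LETHALITY:
    share = survivor_hits[section] / all_hits[section]
    print(f"{section:9s} share of hits seen on returning planes: {share:.0%}")
```

Under these assumptions, hits arrive evenly across all four sections, yet the returning aircraft show far more wing and fuselage damage than tail or cockpit damage, simply because aircraft hit in the tail or cockpit rarely come home to be counted.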

Additionally, we need to reinforce our practice of performing quality literature reviews to aid in our identification of these issues.

The office of Devil's Advocate was established by Pope Sixtus V in 1587 / Source: Wikimedia Commons, Artist unknown

Employ Skepticism and a Devil’s Advocate Approach. In 1587, the Roman Catholic Church created the position of Promoter of the Faith – commonly referred to as the Devil’s Advocate. To ensure a candidate for sainthood met the qualifications for canonization, the Devil’s Advocate served as the skeptic (loyal to the institution), looking for reasons not to canonize the individual. Similarly, leaders must embrace a devil’s advocate approach to identifying 2nd/3rd order and evil effects of technology, if not designate a devil’s advocate with the right expertise to identify assumptions, risks, and effects. But creating any devil’s advocate or “risk identification” position is only useful if its input is seriously considered.

Embrace the Suck. Lastly, if we are serious in identifying the 2nd/3rd order and evil effects, to include identifying new dependencies, ethical issues, or other issues which challenge the adaption or development of a new technology or policy – then we must be honest, resilient, and tough. There will be setbacks, delays, and frustration.

FINAL THOUGHT

Technology holds the power to improve and change our personal and professional lives. For the military, technology changes the ways wars are fought (i.e., the character of war), but it may also change the nature of war by potentially reducing the “human dimension” in war. If robotics, AI, and other technological developments eliminate “danger, physical exertion, intelligence and friction,”17 is war easier to wage? Will technology increase its potential frequency? Will technology create a further separation between the military and the population by decreasing the number of personnel needed? These may be some of the 2nd/3rd order and “evil” effects we need to consider.

If you enjoyed this post, please see Dr. Marsella's previous post “Second/Third Order, and Evil Effects” – The Dark Side of Technology (Part I)

... as well as the following posts:

- Man-Machine Rules, by Dr. Nir Buras

- An Appropriate Level of Trust

Dr. Nick Marsella is a retired Army Colonel and is currently a Department of the Army civilian serving as the Devil’s Advocate/Red Team for the U.S. Army’s Training and Doctrine Command.

___________________________________________________________

Author Information

Going where angels fear to tread...

Celeste has worked as a contractor for Homeland Security and FEMA. Her training and activations include the infamous day of 9/11, flood and earthquake operations, mass casualty exercises, and numerous other operations. Celeste is FEMA certified and has completed the Professional Development Emergency Management Series.

- Train-the-Trainer

- Incident Command

- Integrated EM: Preparedness, Response, Recovery, Mitigation

- Emergency Plan Design including all Emergency Support Functions

- Principles of Emergency Management

- Developing Volunteer Resources

- Emergency Planning and Development

- Leadership and Influence, Decision Making in Crisis

- Exercise Design and Evaluation

- Public Assistance Applications

- Emergency Operations Interface

- Public Information Officer

- Flood Fight Operations

- Domestic Preparedness for Weapons of Mass Destruction

- Incident Command (ICS-NIMS)

- Multi-Hazards for Schools

- Rapid Evaluation of Structures-Earthquakes

- Weather Spotter for National Weather Service

- Logistics, Operations, Communications

- Community Emergency Response Team Leader

- Behavior Recognition

And more….

Celeste grew up in a military & governmental home with her father working for the Naval Warfare Center, and later as Assistant Director for Public Lands and Natural Resources, in both Washington State and California.

Celeste also has training and expertise in small agricultural lobbying, Integrative/Functional Medicine, asymmetrical and symmetrical warfare, and Organic Farming.

My passions are:

- A life of faith (emunah)

- Real News

- Healthy Living
